I Love Keras EarlyStopping

Training neural networks is an iterative process that can be very time-consuming. Rarely do you get the optimal model on your first training run. You try a set of hyperparameters, then evaluate how well the model performs. Then you do it again with different hyperparameters. Rinse and repeat, often by using grid search.

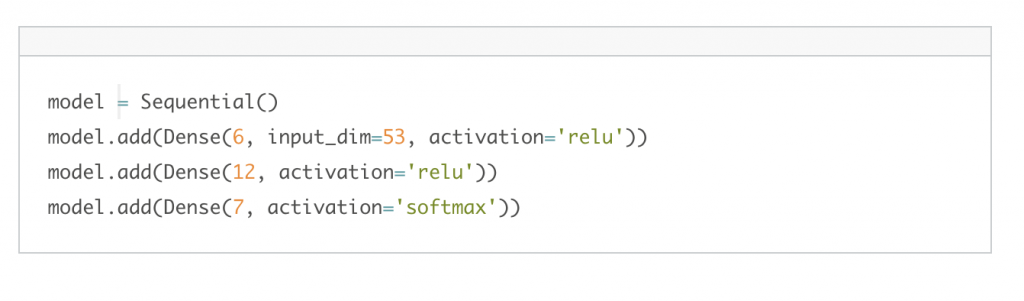

One of the great features of TensorFlow 2.x is an API called Keras. It takes the potentially massive amount of code needed to build neural networks and wraps it in a nice, compact interface. For example, to build an artificial neural network with 53 inputs, 2 hidden layers (one with 6 neurons and the other with 12), and 7 output classes, you can use something like the following code. That's it. 4 lines of code! It takes quite a bit more with standard TensorFlow.
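A minimal sketch of the network described above, to make the layer sizes concrete. The activation functions (`relu` for the hidden layers, `softmax` for the output) are my assumptions, since only the layer sizes are given here.

```python
import tensorflow as tf

# 53 inputs -> 6 neurons -> 12 neurons -> 7 output classes.
# Activations are illustrative assumptions, not taken from the post.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(53,)),
    tf.keras.layers.Dense(6, activation="relu"),
    tf.keras.layers.Dense(12, activation="relu"),
    tf.keras.layers.Dense(7, activation="softmax"),
])
```

Calling `model.summary()` prints the layers and their parameter counts, which is a quick way to check that the shapes are what you intended.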

Back to training. When training, you need to specify how many epochs you want to train for. An epoch is "one pass over the entire dataset, used to separate training into distinct phases." Sometimes you need to run 100 epochs to properly train your model, sometimes 1,000 or more. But what if you could have the training run automatically stop (not run any more epochs of training) when, according to a metric you set, the model is no longer continuing to improve? Keras has a nice class called "EarlyStopping" that does just that. It "stops training when a monitored metric has stopped improving."

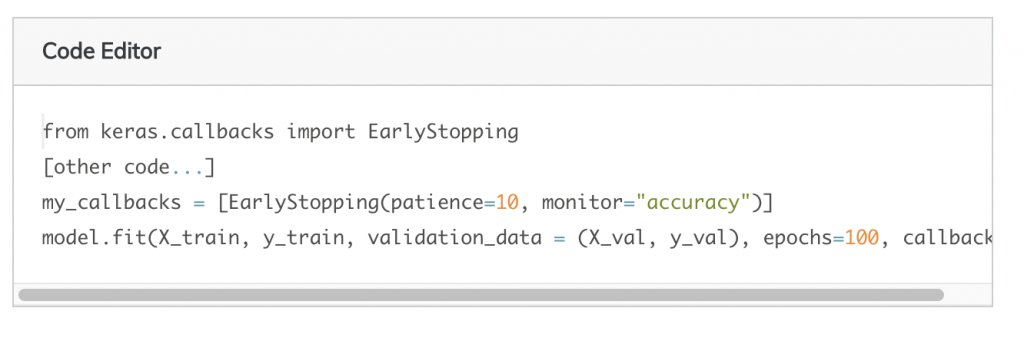

EarlyStopping falls into a group of objects known as callbacks, which are specified when the model is trained using model.fit(). By passing an EarlyStopping callback, you tell the model to quit training when a certain metric has not improved for a specified number of epochs. So maybe after 10 epochs of no improvement, stop the training. If you have specified the training to run for 100 epochs and it stops at 50 epochs due to no improvement, you have saved 50% of the time you would have needed for training. Saving time is always a benefit! Plus, stopping early can help you avoid overfitting.
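To make the "no improvement for N epochs" rule concrete, here is a rough plain-Python sketch of the idea. It is a simplification for illustration, not the Keras implementation, and the function name `epochs_before_stop` is made up for this example.

```python
def epochs_before_stop(scores, patience, higher_is_better=True):
    """Return how many epochs run before a patience rule stops training.

    scores holds one metric value per epoch. Training stops once the
    metric has failed to beat its best value for `patience` epochs in a row.
    """
    best = None
    waited = 0
    for epoch, score in enumerate(scores, start=1):
        if best is None:
            improved = True
        elif higher_is_better:
            improved = score > best
        else:
            improved = score < best

        if improved:
            best = score
            waited = 0  # reset the counter on any improvement
        else:
            waited += 1
            if waited >= patience:
                return epoch  # stop here
    return len(scores)  # never triggered: all epochs ran


# Accuracy peaks at epoch 3, then stalls for 3 epochs.
accuracy_per_epoch = [0.50, 0.60, 0.70, 0.70, 0.69, 0.70, 0.68]
print(epochs_before_stop(accuracy_per_epoch, patience=3))  # 6
```

Note that merely matching the best value does not count as an improvement in this sketch, so a plateau uses up patience just like a drop does.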

Here's how it works. First, you import EarlyStopping from keras.callbacks (tensorflow.keras.callbacks in TensorFlow 2.x). Then specify a value for the "patience" hyperparameter. This is the number of epochs that must pass without improvement before training stops. Continue by specifying which metric to evaluate after each epoch of training. Finally, tell your model to use the EarlyStopping callback when training. It's that easy. For example, you can ask for 100 epochs but have training stop once the training accuracy has not improved for 10 consecutive epochs.
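Putting those steps together, a sketch might look like the following. The random data, the optimizer, the loss, and the activations are all stand-in assumptions so that the example runs on its own; swap in your own dataset and model.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.callbacks import EarlyStopping

# Synthetic stand-in data: 200 samples, 53 features, 7 classes (assumption).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 53)).astype("float32")
y = rng.integers(0, 7, size=200)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(53,)),
    tf.keras.layers.Dense(6, activation="relu"),
    tf.keras.layers.Dense(12, activation="relu"),
    tf.keras.layers.Dense(7, activation="softmax"),
])
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)

# Stop once training accuracy has not improved for 10 epochs in a row.
early_stop = EarlyStopping(monitor="accuracy", patience=10)

# Ask for up to 100 epochs; the callback may end the run sooner.
history = model.fit(X, y, epochs=100, callbacks=[early_stop], verbose=0)

epochs_run = len(history.history["loss"])
print(f"Training ran for {epochs_run} epochs")
```

One design note: EarlyStopping monitors `val_loss` by default, and if avoiding overfitting is the goal, a validation metric is usually the more telling choice. That requires passing `validation_data` or `validation_split` to `model.fit()`.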

Several other parameters can be specified for EarlyStopping, such as min_delta and verbose. Check out the documentation page for details. I'll discuss some of the other callbacks that are available in future posts.
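As a quick illustration of those extra parameters, here is a callback that sets `min_delta` and `verbose`. The specific values are arbitrary assumptions chosen for the example.

```python
from tensorflow.keras.callbacks import EarlyStopping

early_stop = EarlyStopping(
    monitor="val_loss",  # needs validation data passed to model.fit()
    min_delta=0.001,     # changes smaller than this do not count as improvement
    patience=10,         # epochs to wait without improvement before stopping
    verbose=1,           # print a message when training is stopped early
)
```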