RE-WORK Deep Learning Summit: Benchmarking Large Image Datasets

At the Re-Work Deep Learning Summit in San Francisco in January, I learned about some very large image datasets. I had heard of and used the CIFAR-10 and CIFAR-100 datasets, as well as ImageNet, but two of the datasets discussed at the summit, the “80 Million Tiny Images Dataset” and the “CelebA Dataset”, were new to me. All of these are very large collections of images that are labeled for classification tasks.

I often benchmark servers against each other to figure out which one performs best in different situations. Because computer vision is such a big topic today, benchmarks on large image datasets are particularly useful and informative. Even a 10% increase in speed can have a large impact on a business.

In the next few weeks, I will be comparing the CPU-to-GPU bus in the IBM POWER9 AC922 server against the buses in several Intel-based servers. All of the servers will have the same NVIDIA V100 GPUs and similar CPUs, but their buses move data between the CPU and GPU at different speeds. The faster the bus, the more quickly data can be moved from the CPU to the GPU and back. The AC922 uses NVIDIA's NVLink technology between the CPU and GPU, while the Intel-based servers use PCIe Gen3. The NVLink bus in the AC922 moves data at 150GB/sec, versus 32GB/sec for the PCIe Gen3 bus in the Intel-based servers. The question I am trying to answer is whether image classification tasks will run faster on the AC922, since its bus is approximately 5x faster (150/32 ≈ 4.7) than those of the Intel-based servers. Benchmarks from other groups using different types of datasets have shown this to be the case, but I do not think this has been done with these large datasets of very small images.

After the bus benchmarks, I will be testing disk I/O, in particular the IBM Elastic Storage Server (ESS) running Spectrum Scale against other external storage systems. The results should be published in the next few weeks, and I am excited to see how they come out.
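To put the NVLink and PCIe Gen3 bandwidth figures in perspective for small-image datasets, here is a rough back-of-the-envelope sketch. It assumes uncompressed 32x32 RGB images stored as one byte per channel value, uses the nominal count of 80 million for the Tiny Images dataset (taken from its name, not an exact count), and treats the quoted peak rates as fully achievable. It ignores latency, protocol overhead, and whether the quoted figures are one-way or bidirectional, so real transfers will be slower; it only illustrates how the ~5x bandwidth gap translates into idealized transfer time.

```python
# Idealized CPU-to-GPU transfer-time estimate: bytes / bandwidth.
# Assumption: the peak rates quoted in the text are fully achievable;
# real throughput is lower due to latency and protocol overhead.

GB = 10**9  # decimal gigabyte, as commonly used in bandwidth figures

BUS_BANDWIDTH_GB_PER_S = {
    "NVLink (AC922)": 150,
    "PCIe Gen3": 32,
}


def image_bytes(height: int, width: int, channels: int = 3, bytes_per_value: int = 1) -> int:
    """Raw size of one uncompressed image (uint8 values by default)."""
    return height * width * channels * bytes_per_value


def transfer_seconds(num_bytes: int, bandwidth_gb_per_s: float) -> float:
    """Ideal time to move num_bytes at the given bandwidth in GB/s."""
    return num_bytes / (bandwidth_gb_per_s * GB)


def main() -> None:
    datasets = {
        # CIFAR-10: 60,000 RGB images of 32x32 pixels.
        "CIFAR-10": 60_000 * image_bytes(32, 32),
        # Assumption: nominal 80 million 32x32 RGB images.
        "80M Tiny Images (nominal)": 80_000_000 * image_bytes(32, 32),
    }
    for name, size in datasets.items():
        print(f"{name}: {size / GB:.2f} GB raw")
        for bus, bw in BUS_BANDWIDTH_GB_PER_S.items():
            print(f"  {bus}: {transfer_seconds(size, bw):.4f} s")
    ratio = BUS_BANDWIDTH_GB_PER_S["NVLink (AC922)"] / BUS_BANDWIDTH_GB_PER_S["PCIe Gen3"]
    print(f"Peak bandwidth ratio: {ratio:.2f}x")


if __name__ == "__main__":
    main()
```

Under these assumptions, moving the raw 80 Million Tiny Images data once takes about 1.6 seconds over NVLink versus about 7.7 seconds over PCIe Gen3. Whether that gap shows up in end-to-end training time depends on how much of each step is spent on transfers rather than GPU compute, which is what the benchmarks are meant to measure.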

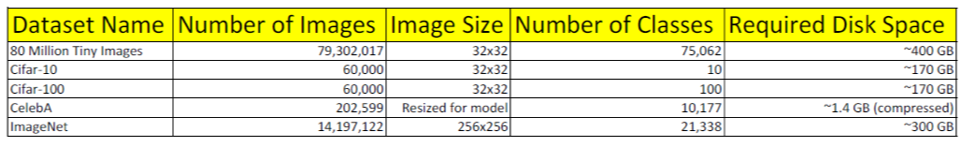

The details on the different datasets are below.